Overview:

Robotic soccer is a new common task for artificial intelligence (AI) and robotics research. It provides a good testbed for evaluating various theories, algorithms, and agent architectures.

We are interested in the following research issues:

- Behavior learning algorithm
- Adaptive vision system
- Multiple Omni-vision sensing system

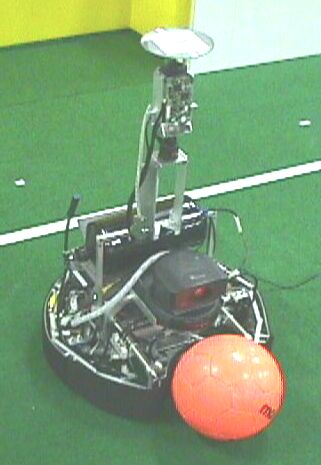

Our robot:

We constructed a lightweight mobile robot equipped with visual and tactile sensors, a TCP/IP communication device, and a portable PC (Toshiba Libretto100) running Linux.

References:

Takayuki Nakamura et al., "Development of A Cheap On-board Vision Mobile Robot for Robotic Soccer Research," Proc. of IROS'98, pp. 431-436, 1998.

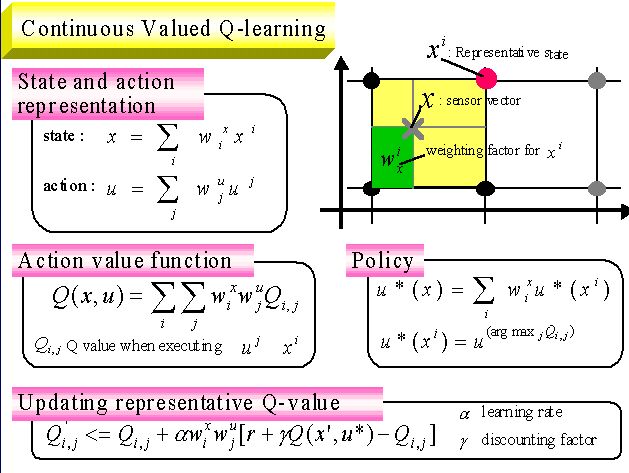

Behavior learning algorithm for mobile robots:

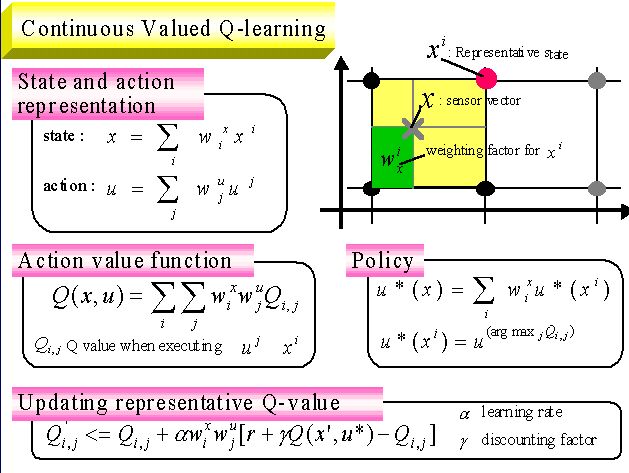

Reinforcement learning has been receiving increased attention as a method that needs little or no a priori knowledge and offers a higher capability for reactive and adaptive behaviors through interactions between the physical agent and its environment. Common reinforcement learning methods such as Q-learning normally need well-defined, quantized state and action spaces to converge. This makes them difficult to apply to real robot tasks, whose state and action spaces are hard to quantize well. Even when they can be applied to real robot tasks, the resulting robot behavior is jerky rather than smooth, because the action commands are quantized (e.g., "forward" or "left turn").
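For reference, tabular Q-learning updates one entry of a quantized state-action table per step: Q(s,a) ← Q(s,a) + α[r + γ max_a' Q(s',a') - Q(s,a)]. The minimal sketch below applies that update to a hypothetical toy version of ball chasing (the ball's image offset quantized into five bins, three discrete turning commands). The task, reward, and parameter values are illustrative assumptions, not taken from the work described here. It shows the limitation discussed above: whatever values are learned, the policy can only output one of the fixed commands.

```python
import random

# Hypothetical toy task (not from the original work): the ball's horizontal
# offset in the image is quantized into N_STATES bins; bin GOAL = "centered".
N_STATES = 5
GOAL = 2
ACTIONS = ["turn_left", "forward", "turn_right"]  # quantized action commands


def step(state, action):
    """Deterministic toy transition; returns (next_state, reward, done)."""
    shift = {0: +1, 1: 0, 2: -1}[action]  # turning moves the ball one bin
    nxt = min(max(state + shift, 0), N_STATES - 1)
    done = nxt == GOAL
    return nxt, (1.0 if done else 0.0), done


def greedy(q, s):
    """Index of the action with the largest Q-value in state s."""
    return max(range(len(ACTIONS)), key=lambda a: q[s][a])


def train(episodes=3000, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_STATES)]  # the Q-table
    for _ in range(episodes):
        s = rng.choice([x for x in range(N_STATES) if x != GOAL])
        for _ in range(20):
            # epsilon-greedy exploration over the discrete action set
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = greedy(q, s)
            s2, r, done = step(s, a)
            target = r if done else r + gamma * max(q[s2])
            q[s][a] += alpha * (target - q[s][a])  # one-step Q-learning update
            if done:
                break
            s = s2
    return q


Q = train()
policy = {s: ACTIONS[greedy(Q, s)] for s in range(N_STATES) if s != GOAL}
print(policy)
```

The learned policy turns toward the center from either side, but every output is one of three discrete commands, so a real robot driven this way switches abruptly between them.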

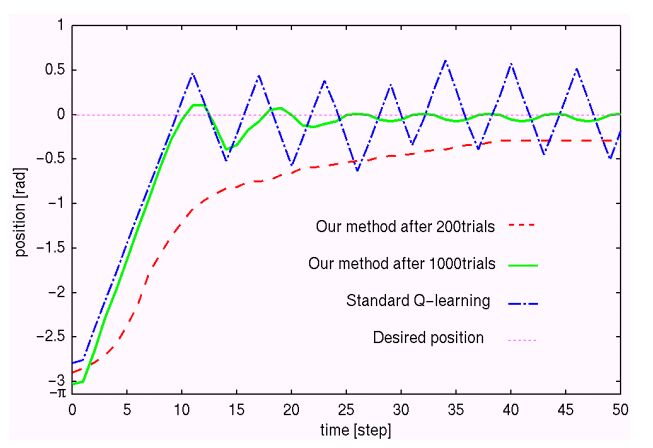

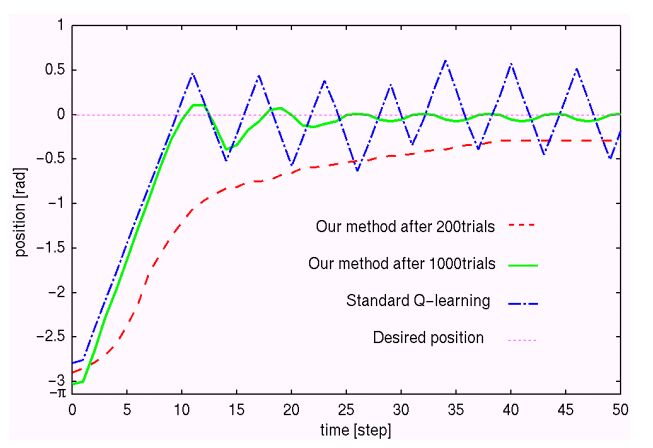

To deal with this problem, we proposed a continuous valued Q-learning method (hereafter called CVQ-learning) for real robot applications. This method uses function approximation to represent the action value function. In this work, we point out that this type of learning method can suffer from a discontinuity problem: the optimal action may change discontinuously from one state to a neighboring one. To resolve this problem, we also proposed a method for estimating where such discontinuities of the optimal action take place and for refining the state space for CVQ-learning accordingly. To show the validity of our method, we applied it to a vision-guided mobile robot whose task is to chase the ball. Although the task is simple, the performance is quite impressive.
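The exact CVQ-learning formulation is given in the paper cited below. As a hedged sketch of the general idea only, the code assumes a common interpolation scheme. Q-values are stored for a few representative states and actions, and each representative state is weighted by a normalized Gaussian activation. The continuous output action is the activation-weighted blend of each representative state's greedy action. The helper `discontinuities` is likewise a hypothetical illustration of state-space refinement: it flags neighboring representative states whose greedy actions jump, as candidate places to insert a new representative state. All names, the 1-D state, and the numbers are assumptions.

```python
import math

# Hypothetical representative states (e.g. normalized ball offset in [-1, 1])
# and representative actions (e.g. normalized turning rates).
REP_STATES = [-1.0, -0.5, 0.0, 0.5, 1.0]
REP_ACTIONS = [-1.0, 0.0, 1.0]
SIGMA = 0.25  # assumed width of each representative state's receptive field


def weights(x):
    """Normalized Gaussian activation of each representative state at x."""
    raw = [math.exp(-((x - c) ** 2) / (2 * SIGMA ** 2)) for c in REP_STATES]
    total = sum(raw)
    return [r / total for r in raw]


def greedy_actions(q):
    """Greedy representative action for each representative state (row of q)."""
    return [REP_ACTIONS[max(range(len(REP_ACTIONS)), key=lambda k: row[k])]
            for row in q]


def continuous_action(x, q):
    """Blend the representative states' greedy actions by their activations."""
    return sum(w * a for w, a in zip(weights(x), greedy_actions(q)))


def discontinuities(q, threshold=1.5):
    """Midpoints between neighboring representative states whose greedy actions
    differ by at least `threshold` (candidate spots for refinement)."""
    g = greedy_actions(q)
    return [(REP_STATES[i] + REP_STATES[i + 1]) / 2
            for i in range(len(REP_STATES) - 1)
            if abs(g[i] - g[i + 1]) >= threshold]


# Hypothetical learned table: rows = representative states, cols = actions.
q_example = [
    [0.1, 0.2, 0.9],
    [0.1, 0.3, 0.8],
    [0.2, 0.4, 0.7],
    [0.9, 0.3, 0.1],
    [0.8, 0.2, 0.1],
]
print(continuous_action(0.0, q_example), discontinuities(q_example))
```

With this table, the blended action at x = 0.0 falls strictly between 0 and +1 instead of snapping to a representative action, and the jump from +1 to -1 between representative states 0.0 and 0.5 is flagged at the midpoint 0.25.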

References:

M. Takeda, T. Nakamura, M. Imai, T. Ogasawara and M. Asada, "Enhanced Continuous Valued Q-learning for Real Autonomous Robots," Proc. of Int. Conf. of The Society for Adaptive Behavior 2000, pp. 195-202, 2000.

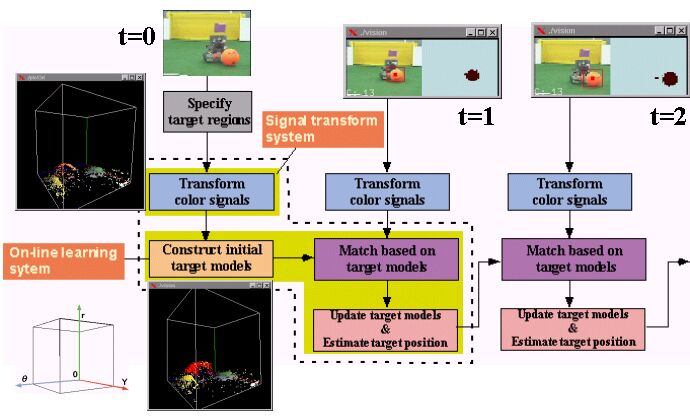

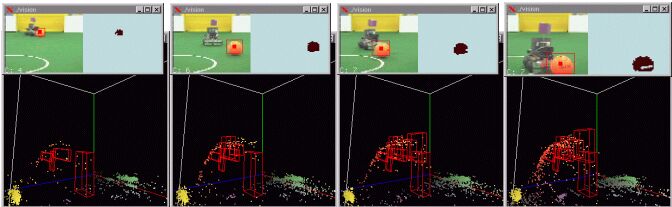

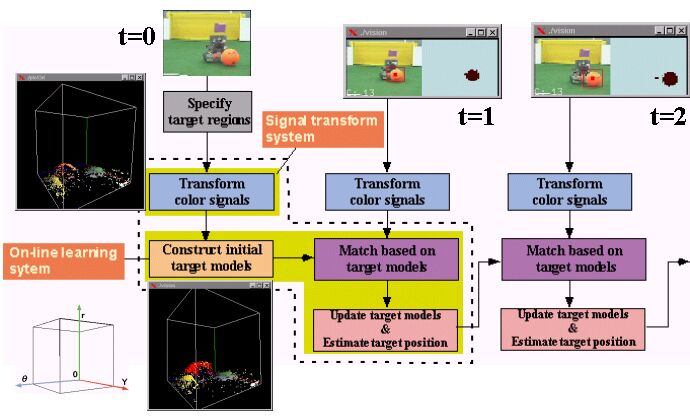

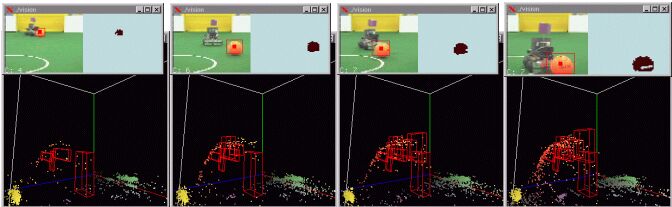

Adaptive vision system:

Robust visual tracking is indispensable for building vision-based robotic systems. The hard problem in visual tracking is matching the target quickly and reliably in every frame. A variety of tracking techniques and algorithms have been developed. Among them, color-based image segmentation and tracking algorithms seem practical and robust in the real world, because color is comparatively insensitive to changes in scene geometry and to occlusion.

Most existing methods need a bootstrap process to model the sample color distribution for image segmentation. That is, to calibrate their statistical models for image segmentation, these visual tracking systems must wait until enough color data has accumulated, even when they work in a dynamic environment. To keep visual tracking systems running in real environments, an on-line learning method for acquiring the models used for image segmentation should be developed.

We developed an on-line visual learning method for color image segmentation and object tracking in dynamic environments. Such an on-line visual learning method is indispensable for realizing a vision-based system that can keep running in the real world.

To realize on-line learning, our method uses the fuzzy ART model, a kind of neural network for competitive learning. Although the color images we deal with are represented in the YUV color space, YUV space is not suitable as input to the fuzzy ART model. For this reason, YUV values are transformed into another color space, which enables the fuzzy ART model to segment color images on-line. As a result, even if the surroundings change (for example, the lighting conditions), our on-line visual learning method can perform color image segmentation and object tracking correctly.
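The fuzzy ART operations mentioned above can be sketched with the standard textbook equations. The input is complement coded, and a choice function ranks the existing categories. A vigilance test then either accepts the best category or commits a new one, and a learning rule updates the winning weight vector with the fuzzy AND (component-wise minimum) of input and weights. Because a new category is created whenever no existing one passes the vigilance test, learning proceeds on-line, one sample at a time, with no bootstrap phase. The sketch assumes each pixel has already been mapped to three color features scaled to [0, 1] (the source does not name the target color space). The vigilance value and sample colors are hypothetical.

```python
from typing import List


def complement_code(a: List[float]) -> List[float]:
    """Fuzzy ART complement coding: I = (a, 1 - a); inputs must lie in [0, 1]."""
    return list(a) + [1.0 - v for v in a]


class FuzzyART:
    def __init__(self, rho: float = 0.8, alpha: float = 0.001, beta: float = 1.0):
        self.rho = rho      # vigilance: higher -> finer color categories
        self.alpha = alpha  # choice parameter
        self.beta = beta    # learning rate (1.0 = fast learning)
        self.w: List[List[float]] = []  # one weight vector per category

    def train(self, a: List[float]) -> int:
        """Present one input, update the resonating category, return its index."""
        i = complement_code(a)
        norm_i = sum(i)
        # Choice function T_j = |I ^ w_j| / (alpha + |w_j|), ^ = component-wise min
        scores = []
        for j, w in enumerate(self.w):
            match = sum(min(x, y) for x, y in zip(i, w))
            scores.append((match / (self.alpha + sum(w)), match, j))
        # Try categories from best to worst choice value
        for _, match, j in sorted(scores, reverse=True):
            if match / norm_i >= self.rho:  # vigilance test passed: resonance
                self.w[j] = [self.beta * min(x, y) + (1 - self.beta) * y
                             for x, y in zip(i, self.w[j])]
                return j
        # No category passed the vigilance test: commit a new one
        self.w.append(list(i))
        return len(self.w) - 1


art = FuzzyART(rho=0.85)
ball = art.train([0.9, 0.5, 0.1])     # hypothetical ball color
field = art.train([0.2, 0.7, 0.2])    # hypothetical field color
ball2 = art.train([0.88, 0.52, 0.12]) # ball color after a slight lighting change
print(ball, field, ball2, len(art.w))
```

Here the ball-colored and field-colored samples end up in two different categories, while the slightly shifted ball color is absorbed into the existing ball category rather than creating a new one.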

References:

Takayuki Nakamura and Tsukasa Ogasawara, "On-Line Visual Learning Method for Color Image Segmentation and Object Tracking," Proc. of IROS'99, pp. 222-228, 1999.

Multiple Omni-vision system:

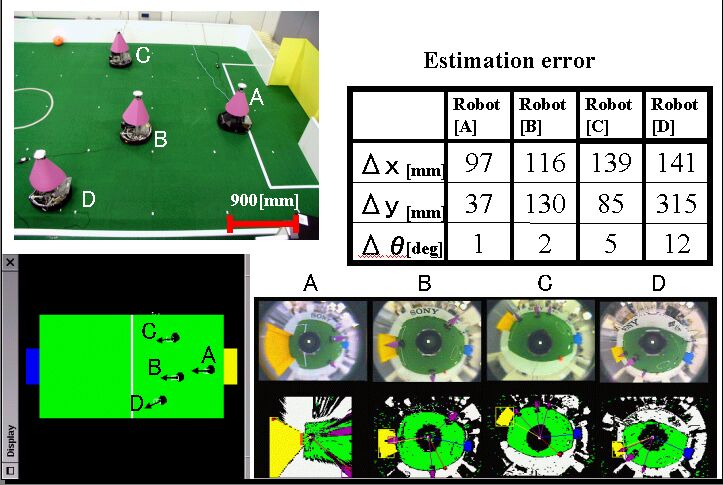

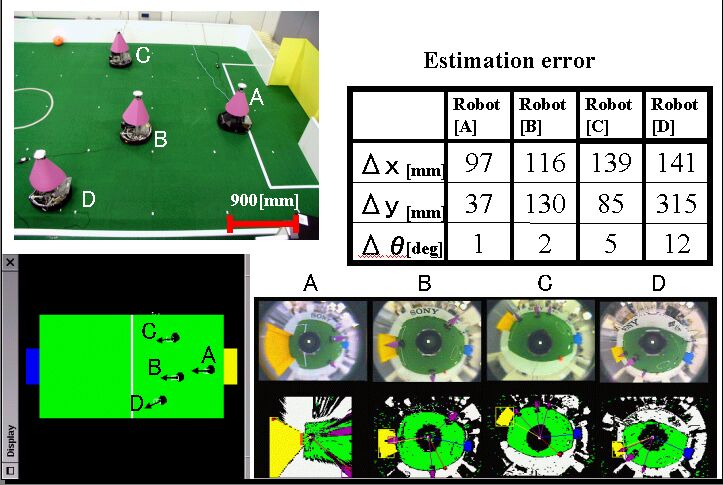

In order for multiple robots to operate successfully in a cooperative way, each robot must be able to localize itself and to know where the other robots are in a dynamic environment. Furthermore, this estimation should be done in real time. An accurate localization method is a key technology for accomplishing tasks cooperatively. Since robots in a multiple robot system can share observations by communicating with each other, each robot can use redundant information to localize itself. Therefore, such robots can solve the localization problem more easily than a single robot can. On the other hand, in a multiple robot system it is difficult to identify the other robots using only visual information.

We developed a new method for estimating the spatial configuration of multiple robots in the environment using omnidirectional vision sensors. Even when there was an obstacle in the environment where the robots were located, our method could estimate the absolute configuration of the robots with high accuracy.

Our method is based on identifying potential triangles among any three robots using a simple triangle constraint. In this work, to enhance the functions of an autonomous decentralized system, our method gives a self-localization capability to a single robot among the multiple robots, in addition to the capability of estimating a relative configuration from shared observations. Thanks to this self-localization capability, our method can use one more constraint, called the "enumeration constraint." This constraint drastically eliminates impossible triangles and makes our algorithm fast and robust.
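Below is a minimal sketch of the triangle constraint, assuming it is the interior-angle rule. Each omnidirectional sensor gives only the bearings of unidentified objects, but if robots A, B and C really form a triangle, the angles each robot sees between its two partners must sum to π. The code enumerates every choice of two bearings per robot for each robot triple and keeps the choices that satisfy the rule within a tolerance. The enumeration constraint and the later verification step are not modeled. The robot layout, headings, obstacle bearings, and tolerance are made up for the example.

```python
import math
from itertools import combinations, product


def interior_angle(b1, b2):
    """Unsigned angle in [0, pi] between two bearings (radians)."""
    d = abs(b1 - b2) % (2 * math.pi)
    return min(d, 2 * math.pi - d)


def potential_triangles(obs, tol=math.radians(3)):
    """obs maps robot id -> list of unidentified bearings (radians, robot frame).
    Returns (robot triple, chosen bearing pairs) whose angles sum to ~pi."""
    found = []
    for trio in combinations(sorted(obs), 3):
        pair_choices = [list(combinations(obs[r], 2)) for r in trio]
        for pairs in product(*pair_choices):
            total = sum(interior_angle(p, q) for p, q in pairs)
            if abs(total - math.pi) <= tol:
                found.append((trio, pairs))
    return found


# Hypothetical scene: three robots, each also seeing one obstacle.
positions = {"A": (0.0, 0.0), "B": (4.0, 0.0), "C": (0.0, 3.0)}
headings = {"A": 0.3, "B": -1.0, "C": 2.0}  # unknown to the algorithm


def bearing(frm, to):
    """Bearing of robot `to` as seen in robot `frm`'s own frame."""
    (x0, y0), (x1, y1) = positions[frm], positions[to]
    return math.atan2(y1 - y0, x1 - x0) - headings[frm]


obs = {r: [bearing(r, o) for o in positions if o != r] for r in positions}
spurious = {"A": 2.5, "B": 0.7, "C": -2.2}  # made-up obstacle bearings
for r in obs:
    obs[r].append(spurious[r])

found = potential_triangles(obs)
print(len(found), "candidate triangles out of", 3 ** 3)
```

The true assignment always passes, but some wrong assignments can pass by chance as well, which is why candidate triangles still have to be pruned and verified.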

After potential triangles are identified, they are sequentially verified using information from neighboring triangles. Finally, our method reconstructs the absolute configuration of the multiple robots in the environment using knowledge of landmarks.
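For the final step, one common way to use known landmarks is a least-squares 2-D rigid alignment (an assumption here, since the source does not specify the method). Given landmark positions expressed in the robots' relative frame and their known world positions, solve for the rotation and translation, then map every robot position through it. The sketch below uses the closed-form 2-D solution and assumes the relative configuration is already metric. If it were known only up to scale, a similarity transform with a scale factor would be needed instead. The landmark data are synthesized from an assumed transform so the result can be checked.

```python
import math


def fit_rigid_2d(local_pts, world_pts):
    """Least-squares rotation theta and translation t such that
    R(theta) * p + t ~= q for matched pairs p in local_pts, q in world_pts."""
    n = len(local_pts)
    if n < 2 or n != len(world_pts):
        raise ValueError("need at least two matched point pairs")
    pcx = sum(x for x, _ in local_pts) / n
    pcy = sum(y for _, y in local_pts) / n
    qcx = sum(x for x, _ in world_pts) / n
    qcy = sum(y for _, y in world_pts) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(local_pts, world_pts):
        px, py, qx, qy = px - pcx, py - pcy, qx - qcx, qy - qcy  # center both sets
        dot += px * qx + py * qy
        cross += px * qy - py * qx
    theta = math.atan2(cross, dot)  # optimal rotation angle
    c, s = math.cos(theta), math.sin(theta)
    t = (qcx - (c * pcx - s * pcy), qcy - (s * pcx + c * pcy))
    return theta, t


def to_world(theta, t, pt):
    """Map a point from the relative (local) frame into the world frame."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = pt
    return (c * x - s * y + t[0], s * x + c * y + t[1])


# Hypothetical landmarks in the relative frame, and their world positions
# generated from an assumed transform (theta = 0.5 rad, t = (3, 1)).
landmarks_local = [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0)]
landmarks_world = [to_world(0.5, (3.0, 1.0), p) for p in landmarks_local]

theta, t = fit_rigid_2d(landmarks_local, landmarks_world)
robot_world = to_world(theta, t, (1.0, 1.0))  # a robot's estimated relative position
print(theta, t, robot_world)
```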

References:

T. Nakamura, A. Ebina, T. Ogasawara and H. Ishiguro, "Real-time Estimating Spatial Configuration between Multiple Robots by Triangle and Enumeration Constraints," Proc. of IROS 2000, pp. 2048-2054, 2000.

T. Nakamura, M. Oohara, T. Ogasawara and H. Ishiguro, "Fast self-localization method for mobile robots using multiple omnidirectional vision sensors," Machine Vision and Applications, Vol. 14, No. 2, pp. 129-138, 2003.